What I learned about Rust on my 4th of July Vacation this Year

In Search of a small Cross-Platform CloudWatch Log Shipper

I’ve been using the Python awslogs agent for quite a while now on a variety of operating systems and architectures: Debian, Ubuntu, and AWS Linux on both 386 and AMD64, plus various ARM processors on my home Raspberry Pis. But I’ve always wanted a simple single binary that doesn’t require a large number of dependencies.

You’d think someone would have written one in Golang, but “No!” There is nothing, or at least there wasn’t the last time I looked. This syslog to cloudwatch bridge sort of worked a few years ago, but I just want something simple that lets me specify local files and forward them upstream. Do what the current Python agent does, but with only a single binary.

Enter Rust. A few months ago I gave it a try, built some simple “Hello World” apps, and installed Cargo on my Pi 3Bs, and it seemed to just work. It was also a hell of a lot faster than .NET Core.

I thought awatchlog had potential, but it still looks too early and it failed the 10-minute test.

Then I remembered Pete Cheslock’s tweet about Vector a few days ago and decided to give it a try. Vector sort of reminds me of telegraf, but it is much more than that, and the idea of forwarding events to S3 or CloudWatch Logs is appealing, not to mention all the other capabilities it has.

In my typical fashion, I didn’t really read the documentation. I just started fumbling around with a compile on my new Raspberry Pi 4B with only 1GB RAM. What could go wrong, given I’ve hardly done anything with Rust and it has been months since I’ve compiled anything of note from source?

Opening up Cargo

Since this was a new server, I had to install cargo, and then I downloaded Vector 0.3.0 as a zip file. As usual, I didn’t read the docs; I just started trying different make commands such as “bench” and “test”, which pulled in over 480 dependencies that had to be downloaded and compiled.

And this is when things started breaking.

The jemalloc crate failed to build with some obscure (and quite scary-looking) gcc (or rustc) errors. Next it was the leveldb crate that failed.

Were there issues compiling these libraries on armv7l?

What was the problem? I started with leveldb. How was it used? Could it be disabled?

pi@pi4b-7587c0da:~/vector-0.3.0/src $ grep -r leveldb *

buffers/mod.rs:#[cfg(feature = "leveldb")]

buffers/mod.rs: #[cfg(feature = "leveldb")]

buffers/mod.rs: #[cfg(feature = "leveldb")]

buffers/mod.rs: #[cfg(feature = "leveldb")]

buffers/mod.rs: #[cfg_attr(not(feature = "leveldb"), allow(unused))]

buffers/mod.rs: #[cfg(feature = "leveldb")]

buffers/disk.rs:#![cfg(feature = "leveldb")]

buffers/disk.rs:use leveldb::database::{

buffers/disk.rs:// Writebatch isn't Send, but the leveldb docs explicitly say that it's okay to share across threads

buffers/disk.rs:// Writebatch isn't Send, but the leveldb docs explicitly say that it's okay to share across threads

(Before going down this path, I remembered that AWS now has ARM instances and I was curious to see how it would compile on EC2 with 4GB RAM. I ran into the same leveldb compilation error and an a1 instance wasn’t noticeably faster than my Pi, so I went back to a local system — also because the ARM EC2 instances are only RedHat derivatives.)

Finding this “feature” in the code led me to conditional compilation, which led me back to the Cargo.toml file.

[features]

default = ["rdkafka", "leveldb"]

docker = [

"cloudwatch-integration-tests",

"es-integration-tests",

"kafka-integration-tests",

"kinesis-integration-tests",

"s3-integration-tests",

"splunk-integration-tests",

]

cloudwatch-integration-tests = []

es-integration-tests = []

kafka-integration-tests = []

kinesis-integration-tests = []

s3-integration-tests = []

splunk-integration-tests = []

Now we were getting somewhere!

Bingo. LevelDB was optional, so I set default to [] and tried compiling again, this time running cargo build within src. Basically, you can issue cargo commands anywhere in the project tree and it will just work. No Makefile to deal with.
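To make the connection between the `#[cfg(feature = "leveldb")]` lines in the grep output and the `[features]` table a bit more concrete, here is a minimal sketch of how feature gating works in general. This is not Vector’s actual buffers code: the function names `available_buffers` and `disk_buffer_enabled` are made up for illustration, and the only thing borrowed from the project is the feature name.

```rust
// Hypothetical sketch of Cargo feature gating; not Vector's real buffers module.

/// Compiled only when the crate is built with the `leveldb` feature enabled.
#[cfg(feature = "leveldb")]
pub fn available_buffers() -> Vec<&'static str> {
    vec!["memory", "disk"]
}

/// Compiled instead when the `leveldb` feature is off,
/// e.g. after setting `default = []` in Cargo.toml.
#[cfg(not(feature = "leveldb"))]
pub fn available_buffers() -> Vec<&'static str> {
    vec!["memory"]
}

/// `cfg!` evaluates to a compile-time boolean, so the same check
/// can also be used inside ordinary expressions.
pub fn disk_buffer_enabled() -> bool {
    cfg!(feature = "leveldb")
}
```

Built without the feature, only the second `available_buffers` exists in the binary and the leveldb dependency never has to compile. Assuming a crate declares a feature with that name, `cargo build --features leveldb` would switch the first definition back in.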

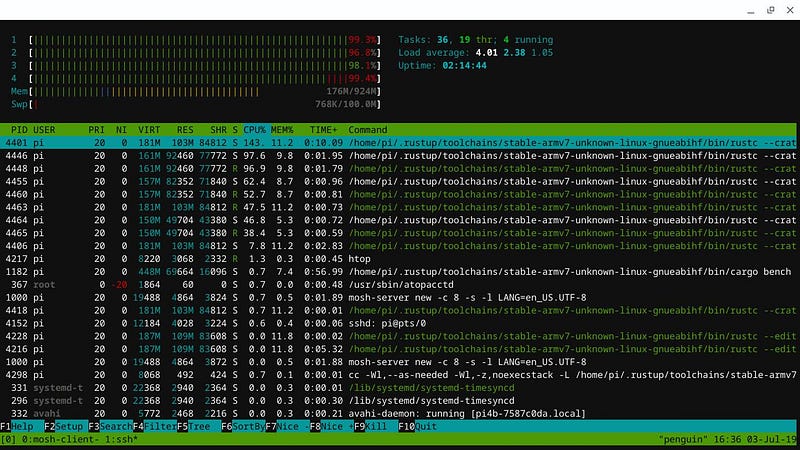

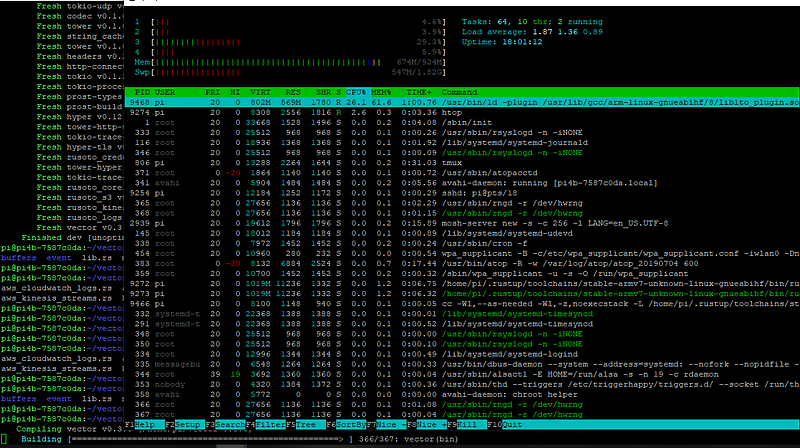

Spending hours compiling today, I saw that cargo parallelizes the compilation of the dependencies. After I removed kafka and leveldb, the build went down to 397 crates. The parallelism appears to be based on the number of cores, and it definitely saturated the CPU. But I was making progress.
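As far as I know, cargo defaults its job count to the number of logical CPUs unless you pass `-j`. The sketch below is not cargo’s code, just a small illustration of how a Rust program can ask the standard library for that number. The function name `default_jobs` is my own.

```rust
use std::thread;

/// One job per logical CPU reported by the OS, falling back to a
/// single job if the count cannot be determined.
/// (Illustrative only; `default_jobs` is a hypothetical helper.)
pub fn default_jobs() -> usize {
    thread::available_parallelism()
        .map(|n| n.get())
        .unwrap_or(1)
}
```

On a memory-constrained board it can be worth capping this by hand with something like `cargo build -j 2`, trading build time for less memory pressure.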

The rest of the compile errors I was able to resolve by adding the necessary Raspbian Buster libraries and packages for OpenSSL, Google Protocol Buffers, and jemalloc2.

I was almost done until the last step: building (and, I assume, linking) the actual binary. Since I was watching memory usage, I saw that the Pi was starting to swap. The default Pi swap configuration is only 100MB, so I set CONF_MAXSWAP to 2048 and that seemed to be sufficient.

I was curious to see how long the final stage of the build took, and modifying a single line resulted in a nearly 7 minute compile time:

pi@pi4b-7587c0da:~/vector-0.3.0/src/sinks $ cargo build

Compiling vector v0.3.0 (/home/pi/vector-0.3.0)

Finished dev [unoptimized + debuginfo] target(s) in 6m 56s

This is the best case for an ARM build, so it is pretty slow, but it gets worse!

Recompiling everything with the “release” build resulted in a 63 minute compile time, because all 396 crates had to be recompiled with optimizations.

pi@pi4b-7587c0da:~/vector-0.3.0/target/debug $ ls -alh vector

-rwxr-xr-x 2 pi pi 226M Jul 4 19:29 vector

pi@pi4b-7587c0da:~/vector-0.3.0/target/debug $ file ../release/vector

../release/vector: ELF 32-bit LSB shared object, ARM, EABI5 version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-armhf.so.3, for GNU/Linux 3.2.0, BuildID[sha1]=1b074605a8d734fb97184ed34656d476fd19c576, with debug_info, not stripped

pi@pi4b-7587c0da:~/vector-0.3.0/target/debug $ ls -alh ../release/vector

-rwxr-xr-x 2 pi pi 22M Jul 4 20:46 ../release/vector

pi@pi4b-7587c0da:~/vector-0.3.0/target/debug $ strip ../release/vector

pi@pi4b-7587c0da:~/vector-0.3.0/target/debug $ ls -alh ../release/vector

-rwxr-xr-x 2 pi pi 12M Jul 4 20:50 ../release/vector

But you end up with a very small single 12MB binary.

So yeah, mission accomplished!

Putting Vector to the Test

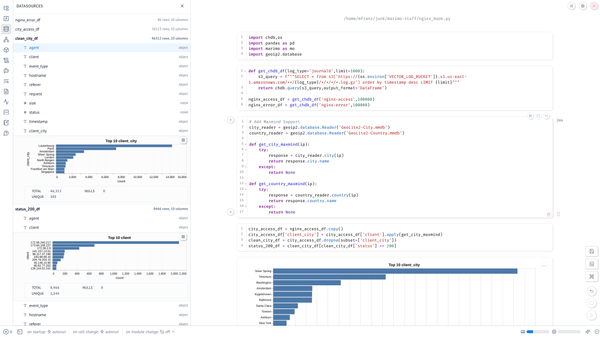

Let’s create a minimal configuration file and see if we can get logs to ship to AWS.

I’ll admit, here is where I went to the documentation, which is quite good for this stage of the project. I knew I wanted to use a file source and a cloudwatch logs sink.

[sources.logs]

type = 'file'

include = ['/var/log/auth.log']

start_at_beginning = false

ignore_older = 3600

[sinks.my_aws_cloudwatch_logs_sink]

# REQUIRED - General

type = "aws_cloudwatch_logs"

inputs = ["logs"]

group_name = "/var/log/auth.log"

region = "us-east-1"

stream_name = "pi3b-82af77e3"

(NOTE: It will fail if the log stream doesn’t exist, but that will be addressed soon in the project.)
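One setting in the source config deserves a quick illustration: `ignore_older = 3600`. As I understand the option, files whose last modification is more than that many seconds in the past get skipped. The sketch below shows that rule in isolation. It is my own reading of the setting, not Vector’s implementation, and `should_ignore` is a hypothetical helper name.

```rust
use std::time::{Duration, SystemTime};

/// Returns true when a file last modified at `modified` is older than
/// `ignore_older_secs` as seen from `now`, i.e. it should be skipped.
/// (Assumed semantics of `ignore_older`; not taken from Vector's source.)
pub fn should_ignore(modified: SystemTime, now: SystemTime, ignore_older_secs: u64) -> bool {
    match now.duration_since(modified) {
        // Normal case: compare the file's age against the cutoff.
        Ok(age) => age > Duration::from_secs(ignore_older_secs),
        // `modified` lies in the future (clock skew): treat the file as fresh.
        Err(_) => false,
    }
}
```

With the value of 3600 from the config, a log file touched a minute ago would be watched, while one untouched for two hours would be left alone. In a real program `modified` would come from something like `std::fs::metadata(path)?.modified()?`.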

Running with 2x verbose mode, there are no surprises.

root@pi3b-82af77e3:/home/pi# ./vector -vvc vector.toml

INFO vector: Log level "trace" is enabled.

INFO vector: Loading config. path="vector.toml"

TRACE vector: Parsing config. path="vector.toml"

INFO vector: Vector is starting. version="0.3.0" git_version="" released="Thu, 04 Jul 2019 19:21:22 GMT" arch="arm"

INFO vector::topology: Running healthchecks.

INFO vector::topology: Starting source "logs"

INFO vector::topology: Starting sink "my_aws_cloudwatch_logs_sink"

INFO source{name="logs"}: vector::sources::file: Starting file server. include=["/var/log/auth.log"] exclude=[]

INFO source{name="logs"}:file-server: file_source::file_server: Found file to watch. path="/var/log/auth.log" start_at_beginning=false start_of_run=true

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

INFO healthcheck{name="my_aws_cloudwatch_logs_sink"}: vector::topology::builder: Healthcheck: Passed.

TRACE source{name="logs"}:file-server: file_source::file_server: TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=107

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=107

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=96

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE sink{name="my_aws_cloudwatch_logs_sink"}: vector::sinks::util: request succeeded: ()

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=96

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE sink{name="my_aws_cloudwatch_logs_sink"}: vector::sinks::util: request succeeded: ()

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=101

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=78

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE sink{name="my_aws_cloudwatch_logs_sink"}: vector::sinks::util: request succeeded: ()

TRACE source{name="logs"}:file-server: file_source::file_server: Continue watching file. path="/var/log/auth.log"

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=94

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=105

TRACE source{name="logs"}:file-server: file_source::file_server: Read bytes. path="/var/log/auth.log" bytes=71

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

TRACE source{name="logs"}:file-server: vector::sources::file: Recieved one event. file="/var/log/auth.log"

Continue watching file. path="/var/log/auth.log"

It works!