Using Packer and AWS CodeBuild to Automate AMI Builds

Why CodeBuild?

I’m no stranger to the idea of using a CI system to create OS images, but until now I had never set one up myself. On past teams, we used Jenkins to publish (and test) images for AWS, Azure, and even VMware for non-SaaS products. Right now I’m working on a side project where I wanted some CI for my Packer and Terraform code.

There are plenty of good (and possibly better) options besides CodeBuild, but I didn’t want to sign up for another SaaS offering or stand up a server. I still remember the pain of using AWS CodeCommit on another team as a way to provide customer-specific repositories that didn’t “cross the streams” with our main infrastructure source code repos hosted in Bitbucket. It felt very hackish, particularly around SSH authentication, but I assume things have improved, because this was back in 2016.

CodeBuild was a completely different experience. It feels much more mature, and most of the challenges I had getting it working were obvious mistakes on my end. Since I mostly use GitLab these days, my original plan was to have CodeBuild use repos hosted there, but unfortunately the two platforms don’t integrate yet. Why not GitLab CI or GitHub Actions? Given the access required in AWS, it made more sense to create a GitHub personal access token and grant my CodeBuild project access to my GitHub repo (which is already public) than the other way around.

While many mid-sized (or larger) organizations host their source code internally on GitLab or GitHub Enterprise, I suspect some smaller shops use the SaaS versions of these platforms, so this could be a consideration as well. Source code is one thing; privileged cloud access is another. I’ll get back to this topic at the end, but you should be wary of extending your AWS permissions to services outside of AWS.

Initial Setup Issues

Using this AWS blog post from 2017 as a starting point, I set up my first project without really reading much else. It did not go as smoothly as it should have, but I don’t fault the service for that. I was rushing and not caffeinated enough on a Sunday morning.

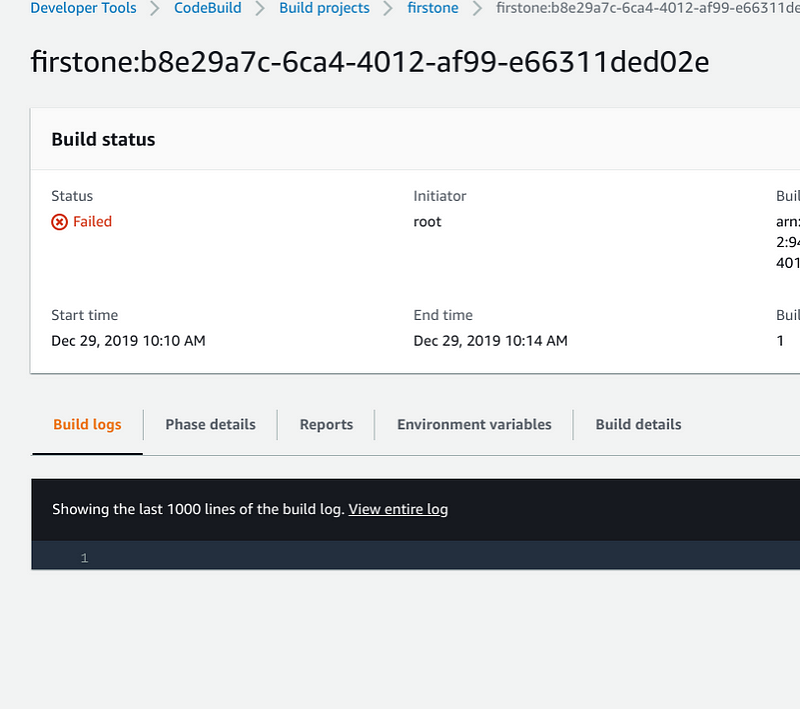

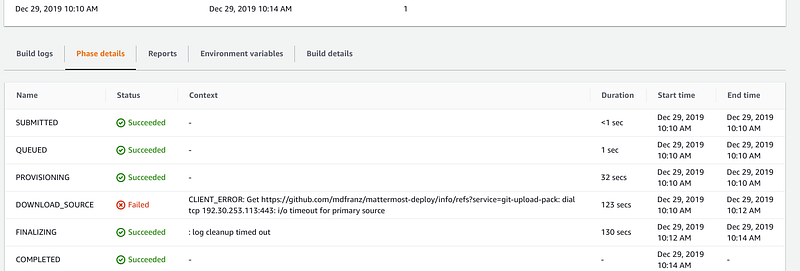

The first problem I encountered was that I assumed from the UI that I should put the build in a VPC. The build failed before it was even able to start because it couldn’t retrieve the buildspec from the repo. As a result, no errors showed up in the console output or in CloudWatch Logs.

But they are there; you just need to look at “Phase details.”

Connectivity errors retrieving source code: I figured the instances had to be created inside a VPC, so I specified a subnet as part of the infrastructure configuration. This was a mistake. The instances do the “right thing” from a security perspective and are stood up on private subnets, so they require a NAT gateway to reach GitHub. Given this is just a side project in my personal account, I didn’t want the additional cost, so I just disabled the VPC setting.

```
CLIENT_ERROR: Get https://github.com/mdfranz/mattermost-deploy/info/refs?service=git-upload-pack: dial tcp 192.30.253.112:443: i/o timeout for primary source
```

My initial concern was that the token didn’t work, and I was worried about how to troubleshoot that on GitHub given the limitations of the GitHub security log.
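When the console hides the error, the same “Phase details” can be pulled from the API. The sketch below assumes Boto3 is installed and AWS credentials are configured; `failed_phase_messages` and `fetch_build` are hypothetical helper names, while `batch_get_builds` and the `phases`/`contexts` fields are part of the CodeBuild API.

```python
from __future__ import annotations


def failed_phase_messages(build: dict) -> list[str]:
    """Summarize phases that did not succeed, using the 'phases' list of a build."""
    lines = []
    for phase in build.get("phases", []):
        status = phase.get("phaseStatus")
        # Skip successful phases and phases that are still running (no final status yet).
        if status in (None, "SUCCEEDED", "IN_PROGRESS"):
            continue
        # A failed phase may carry zero or more context entries with the real error text.
        for ctx in phase.get("contexts") or [{}]:
            code = ctx.get("statusCode", "")
            message = ctx.get("message", "")
            lines.append(f"{phase['phaseType']} {status}: {code} {message}".rstrip())
    return lines


def fetch_build(build_id: str, region: str = "us-east-2") -> dict:
    """Fetch one build record from CodeBuild (requires Boto3 and AWS credentials)."""
    import boto3  # imported here so failed_phase_messages() works without Boto3

    client = boto3.client("codebuild", region_name=region)
    return client.batch_get_builds(ids=[build_id])["builds"][0]
```

For example, `print("\n".join(failed_phase_messages(fetch_build("firstone:<build-id>"))))` should surface a DOWNLOAD_SOURCE error like the one above even when CloudWatch Logs is empty.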

Runtime errors: since I had cut and pasted the example from a two-year-old blog post, it did not just work. This led me to learn about the available runtimes, which was not a bad thing, but in the end I didn’t really need to specify a runtime because I wasn’t doing an install phase.

```
YAML_FILE_ERROR Message: This build image requires selecting at least one runtime version.
```

By this point I could get commands to run, and I started running into issues with file paths and saw the perils of blindly pasting code.

```
[Container] 2019/12/29 16:23:29 Waiting for agent ping
[Container] 2019/12/29 16:23:31 Waiting for DOWNLOAD_SOURCE
[Container] 2019/12/29 16:23:31 Phase is DOWNLOAD_SOURCE
[Container] 2019/12/29 16:23:31 CODEBUILD_SRC_DIR=/codebuild/output/src491537079/src/github.com/mdfranz/mattermost-deploy
[Container] 2019/12/29 16:23:31 YAML location is /codebuild/output/src491537079/src/github.com/mdfranz/mattermost-deploy/buildspec.yml
[Container] 2019/12/29 16:23:31 Processing environment variables
[Container] 2019/12/29 16:23:31 Moving to directory /codebuild/output/src491537079/src/github.com/mdfranz/mattermost-deploy
[Container] 2019/12/29 16:23:31 Registering with agent
[Container] 2019/12/29 16:23:31 Phases found in YAML: 4
[Container] 2019/12/29 16:23:31 POST_BUILD: 1 commands
[Container] 2019/12/29 16:23:31 PRE_BUILD: 7 commands
[Container] 2019/12/29 16:23:31 BUILD: 1 commands
[Container] 2019/12/29 16:23:31 INSTALL: 1 commands
[Container] 2019/12/29 16:23:31 Phase complete: DOWNLOAD_SOURCE State: SUCCEEDED
[Container] 2019/12/29 16:23:31 Phase context status code: Message:
```
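A rough way to confirm what CodeBuild parsed from the buildspec is to pull the “Phases found” line and the per-phase command counts out of a log like the one above. This is a sketch that assumes the `[Container] date time message` layout shown here, which is not a documented format.

```python
from __future__ import annotations

import re

# Assumed layout: "[Container] YYYY/MM/DD HH:MM:SS <message>"
LINE_RE = re.compile(r"^\[Container\] \d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2} (.*)$")
FOUND_RE = re.compile(r"^Phases found in YAML: (\d+)$")
COUNT_RE = re.compile(r"^([A-Z_]+): (\d+) commands?$")


def summarize_phases(log_text: str) -> tuple[int | None, dict[str, int]]:
    """Return (phases declared in the buildspec, command count per phase)."""
    declared = None
    counts: dict[str, int] = {}
    for raw in log_text.splitlines():
        match = LINE_RE.match(raw.strip())
        if not match:
            continue  # blank lines or output from the build commands themselves
        message = match.group(1)
        if found := FOUND_RE.match(message):
            declared = int(found.group(1))
        elif count := COUNT_RE.match(message):
            counts[count.group(1)] = int(count.group(2))
    return declared, counts
```

If the number of phases with command counts differs from the declared total, that is a hint the buildspec was not parsed the way you expected.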

The CODEBUILD_SRC_DIR environment variable is one that I didn’t catch until I started writing this post, and it is important if you need to change directories in any of your commands. Here are some other environment variables that could be useful for troubleshooting. This isn’t the full list; you can run the env command in a build job to see everything.

```
CODEBUILD_AGENT_ENDPOINT=http://127.0.0.1:7831
CODEBUILD_AUTH_TOKEN=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX
CODEBUILD_BMR_URL=https://CODEBUILD_AGENT:3000
CODEBUILD_BUILD_ARN=arn:aws:codebuild:us-east-2:XXXXXXXXXXXXXXX:build/firstone:2a9104df-05da-450d-a743-d6896b9ebb82
CODEBUILD_BUILD_ID=firstone:2a9104df-05da-450d-a743-d6896b9ebb82
CODEBUILD_BUILD_IMAGE=aws/codebuild/amazonlinux2-x86_64-standard:2.0
CODEBUILD_BUILD_NUMBER=31
CODEBUILD_BUILD_SUCCEEDING=1
CODEBUILD_BUILD_URL=https://us-east-2.console.aws.amazon.com/codebuild/home?region=us-east-2#/builds/firstone:2a9104df-05da-450d-a743-d6896b9ebb82/view/new
CODEBUILD_CI=true
CODEBUILD_CONTAINER_NAME=default
CODEBUILD_EXECUTION_ROLE_BUILD=
CODEBUILD_FE_REPORT_ENDPOINT=https://codebuild.us-east-2.amazonaws.com/
CODEBUILD_GOPATH=/codebuild/output/src599011430
CODEBUILD_INITIATOR=GitHub-Hookshot/XXXXXXXXXXXX
CODEBUILD_KMS_KEY_ID=arn:aws:kms:us-east-2:XXXXXXXXXXXXXXXXX:alias/aws/s3
CODEBUILD_LAST_EXIT=0
CODEBUILD_LOG_PATH=2a9104df-05da-450d-a743-d6896b9ebb82
CODEBUILD_PROJECT_UUID=da8f7297-18b3-4736-ace7-e8e3fd60550f
CODEBUILD_RESOLVED_SOURCE_VERSION=d5755a07140b13cccc7b4207db7d6edc0e444e8f
CODEBUILD_SOURCE_REPO_URL=https://github.com/mdfranz/mattermost-deploy.git
CODEBUILD_SOURCE_VERSION=d5755a07140b13cccc7b4207db7d6edc0e444e8f
CODEBUILD_SRC_DIR=/codebuild/output/src599011430/src/github.com/mdfranz/mattermost-deploy
CODEBUILD_START_TIME=1577912884197
CODEBUILD_WEBHOOK_ACTOR_ACCOUNT_ID=XXXXXXX
CODEBUILD_WEBHOOK_EVENT=PUSH
CODEBUILD_WEBHOOK_HEAD_REF=refs/heads/master
CODEBUILD_WEBHOOK_PREV_COMMIT=16811f2919a6453b89b280f5ebe9b109dd64000f
CODEBUILD_WEBHOOK_TRIGGER=branch/master
```
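A build script can use CODEBUILD_SRC_DIR instead of $HOME to find its way back to the repository root. A minimal sketch; `source_root` and `build_context` are hypothetical helpers, and falling back to the current directory outside CodeBuild is an assumption for running the same script locally.

```python
import os
from pathlib import Path


def source_root(env=None) -> Path:
    """Root of the checked-out repo: CODEBUILD_SRC_DIR in CodeBuild, else the cwd."""
    env = os.environ if env is None else env
    return Path(env.get("CODEBUILD_SRC_DIR") or os.getcwd())


def build_context(env=None) -> dict:
    """Collect a few CodeBuild variables that help when troubleshooting a run."""
    env = os.environ if env is None else env
    return {
        "in_codebuild": env.get("CODEBUILD_CI") == "true",
        "build_id": env.get("CODEBUILD_BUILD_ID"),
        "commit": env.get("CODEBUILD_RESOLVED_SOURCE_VERSION"),
        "event": env.get("CODEBUILD_WEBHOOK_EVENT"),        # e.g. PUSH
        "head_ref": env.get("CODEBUILD_WEBHOOK_HEAD_REF"),  # e.g. refs/heads/master
        "initiator": env.get("CODEBUILD_INITIATOR"),
    }


if __name__ == "__main__":
    os.chdir(source_root())  # get back to the repo root regardless of earlier cd commands
    print(build_context())
```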

Gotchas and Guidelines

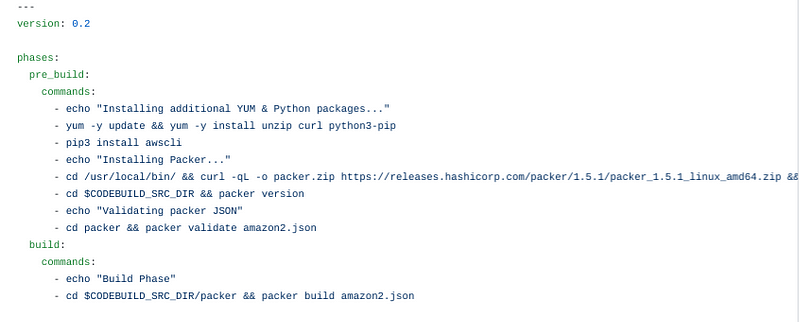

The most important parts of my spec file look like this.

If you’ve seen a .gitlab-ci.yml file, this format won’t be too surprising. There are four “phases,” which correspond to stages in other build systems like Jenkins; they run in the order install, pre_build, build, post_build. These names are fixed and cannot be changed, unlike the arbitrary stage names in other build systems I’ve used. I would assume this syntax is more primitive than what Jenkins or GitLab CI provides, but it met my needs. The install phase does require a runtime, but I didn’t use it, so I could have avoided the runtime errors if I hadn’t just cut and pasted the blog’s buildspec. Still, I learned something from all the errors.
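Since the phase names are fixed, a small pre-commit check can catch a misspelled phase before CodeBuild does. A sketch that assumes the buildspec has already been loaded into a dict (for example with a YAML parser, not shown); `check_phases` is a hypothetical helper that only checks phase names and lists them in execution order, not the rest of the buildspec schema.

```python
PHASE_ORDER = ("install", "pre_build", "build", "post_build")


def check_phases(spec: dict) -> list:
    """Reject unknown phase names and return the present phases in execution order."""
    phases = spec.get("phases", {})
    unknown = sorted(set(phases) - set(PHASE_ORDER))
    if unknown:
        raise ValueError(f"unknown buildspec phases: {unknown}")
    return [name for name in PHASE_ORDER if name in phases]
```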

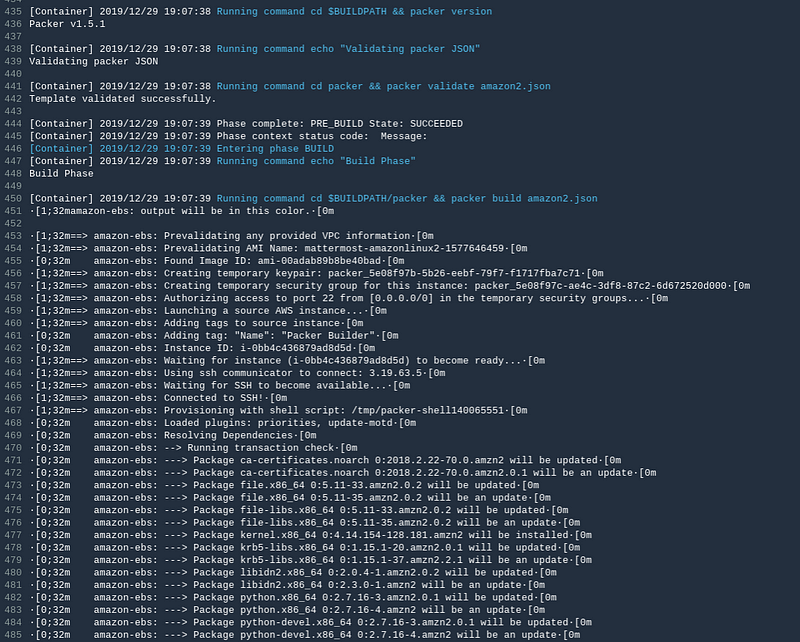

In version 0.2 of the buildspec format, the current directory and other state are preserved between commands and phases. For some reason I assumed each phase would start clean, but that was not the case. In my pre_build phase I only had to download Packer (which is now only available as a zip file), uncompress it, and validate my Packer template.
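The same pre_build steps could also live in a small script. A sketch, assuming HashiCorp’s release URL layout and an archive with a top-level `packer` entry; the helper names, the version, `bin_dir`, and the template name are placeholders you would supply.

```python
import stat
import subprocess
import urllib.request
import zipfile
from pathlib import Path


def packer_url(version: str, os_name: str = "linux", arch: str = "amd64") -> str:
    """Release URL, assuming HashiCorp's packer_<version>_<os>_<arch>.zip naming."""
    return f"https://releases.hashicorp.com/packer/{version}/packer_{version}_{os_name}_{arch}.zip"


def extract_packer(zip_path: Path, bin_dir: Path) -> Path:
    """Extract the 'packer' entry into bin_dir and mark it executable."""
    bin_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extract("packer", path=bin_dir)
    binary = bin_dir / "packer"
    # zipfile does not restore Unix permissions, so add the execute bits explicitly.
    binary.chmod(binary.stat().st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return binary


def install_and_validate(version: str, template: str, bin_dir: Path) -> None:
    """Download Packer, unpack it into bin_dir, and run `packer validate`."""
    bin_dir.mkdir(parents=True, exist_ok=True)
    zip_path = bin_dir / f"packer_{version}.zip"
    urllib.request.urlretrieve(packer_url(version), zip_path)
    binary = extract_packer(zip_path, bin_dir)
    subprocess.run([str(binary), "validate", template], check=True)
```

For example, `install_and_validate("<version>", "template.json", Path("bin"))`, where both the version and the template name are yours to fill in.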

As with most build systems, the directory where your job executes is dynamic (I got flashbacks to troubleshooting Ant jobs on TeamCity in the early 2010s). Your home directory is “/root”, so $HOME isn’t a useful way to get back to the root of your source code. That is where $CODEBUILD_SRC_DIR comes in, but I didn’t find this out until later.

Yum (unlike apt) is pretty good at waiting for other processes to complete, but I did add some delays in the script and some “sync” calls (sync is a sort of magic spell: who knows if it works, but it makes you feel good), and the builds worked. I would recommend using the Docker version of Amazon Linux to test any commands if you are using the Amazon Linux build image. Ubuntu 18.04 is supported as well, but I didn’t want to run it.

My biggest issue (like an idiot!) was trying to uncompress packer.zip in a directory that already contained a directory named packer. Maybe the unzip errors were confusing, or maybe I wasn’t paying attention, but after I got over that hurdle it just worked.
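One way to sidestep that packer.zip collision is to check whether the binary’s name is already taken by a directory before unzipping. A minimal sketch; `safe_binary_dir` is a hypothetical helper and the `.tools` fallback directory is a made-up name.

```python
from pathlib import Path


def safe_binary_dir(workdir: Path, binary_name: str = "packer", fallback: str = ".tools") -> Path:
    """Return a directory where binary_name can be extracted without hitting a directory."""
    if (workdir / binary_name).is_dir():
        # A directory (for example one holding Packer templates) already uses this name.
        return workdir / fallback
    return workdir
```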

Although the blog post said some jq workarounds were required, I had no issues running Packer at all. The build job picked up the IAM permissions of the project’s service role perfectly. I assume the same is true for Terraform, but I haven’t tried it yet. That is next on the list.
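To double-check which IAM identity a build step actually runs as, STS `get_caller_identity` can be called from inside the build. A sketch assuming Boto3 is available in the build image (it may need to be installed); `role_from_arn` and `current_role` are hypothetical helpers, and the role name in the test is invented.

```python
from __future__ import annotations


def role_from_arn(arn: str) -> str | None:
    """Return the role name from an STS assumed-role ARN, or None for other ARNs."""
    # Assumed-role ARNs look like arn:aws:sts::<account>:assumed-role/<role>/<session>
    resource = arn.split(":", 5)[-1]
    parts = resource.split("/")
    if parts[0] == "assumed-role" and len(parts) >= 3:
        return parts[1]
    return None


def current_role(region: str = "us-east-2") -> str | None:
    """Ask STS who we are (requires Boto3 and credentials)."""
    import boto3  # imported here so role_from_arn() works without Boto3

    arn = boto3.client("sts", region_name=region).get_caller_identity()["Arn"]
    return role_from_arn(arn)
```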

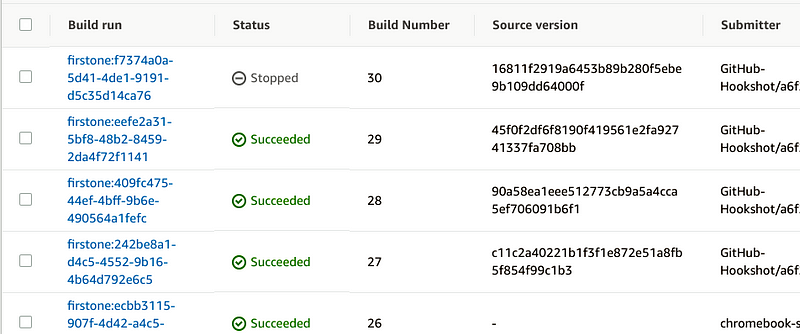

A Build System with a CLI?

I was pleased to see that the AWS CLI just worked. I was able to start and stop builds and view the configuration details. Combined with CloudWatch Logs and Lambda functions, you could build a lot of contraptions. Whether you should is another matter entirely.

```
mdfranz@penguin:~/github/mattermost-deploy$ aws codebuild start-build --project-name "firstone"
{
    "build": {
        "timeoutInMinutes": 60,
        "secondaryArtifacts": [],
        "startTime": 1577647925.387,
        "buildComplete": false,
        "queuedTimeoutInMinutes": 480,
        "environment": {
            "imagePullCredentialsType": "CODEBUILD",
            "environmentVariables": [],
            "type": "LINUX_CONTAINER",
            "image": "aws/codebuild/amazonlinux2-x86_64-standard:2.0",
            "computeType": "BUILD_GENERAL1_SMALL",
            "privilegedMode": false
        },
        "encryptionKey": "arn:aws:kms:us-east-2:012345678910:alias/aws/s3",
        "buildNumber": 25,
        "projectName": "firstone",
        "id": "firstone:ab8e0c6a-1982-4a5b-ad9f-505ce6b22cc8",
        "arn": "arn:aws:codebuild:us-east-2:012345678910:build/firstone:ab8e0c6a-1982-4a5b-ad9f-505ce6b22cc8",
        "secondarySources": [],
        "currentPhase": "QUEUED",
        "initiator": "chromebook-s330",
        "artifacts": {
            "location": ""
        },
        "logs": {
            "cloudWatchLogsArn": "arn:aws:logs:us-east-2:012345678910:log-group:null:log-stream:null",
            "deepLink": "https://console.aws.amazon.com/cloudwatch/home?region=us-east-2#logEvent:group=null;stream=null",
            "s3Logs": {
                "encryptionDisabled": false,
                "status": "DISABLED"
            },
            "cloudWatchLogs": {
                "status": "ENABLED"
            }
```

You can stop builds, configure projects, see build output, and a whole lot more, and the same operations are exposed in Boto3, although I haven’t tried that yet.
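A sketch of what the Boto3 equivalent of the start-build call above might look like, with a simple polling loop. It uses the CodeBuild client’s `start_build` and `batch_get_builds` methods and the `buildComplete` and `buildStatus` fields; `start_and_wait` is a hypothetical helper, the 15-second interval is an arbitrary choice, and `sleep` is injectable only so the loop can be tested without waiting.

```python
import time


def start_and_wait(client, project: str, poll_seconds: float = 15.0, sleep=time.sleep) -> str:
    """Start a build of `project`, poll until it completes, and return its buildStatus."""
    build_id = client.start_build(projectName=project)["build"]["id"]
    while True:
        build = client.batch_get_builds(ids=[build_id])["builds"][0]
        if build.get("buildComplete"):
            return build["buildStatus"]  # e.g. SUCCEEDED, FAILED, STOPPED
        sleep(poll_seconds)
```

For example, `start_and_wait(boto3.client("codebuild", region_name="us-east-2"), "firstone")`. A stuck build could be cancelled with the client’s `stop_build(id=...)`.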

So What Works?

Beyond the basics of getting it running and managing jobs, there were a few other things.

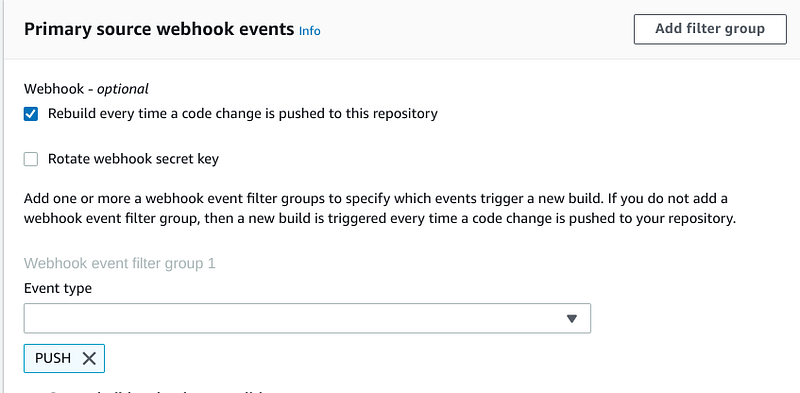

Triggering builds on a push to GitHub was super easy to set up, although this does NOT involve Triggers. Triggers are essentially scheduled (cron) builds and are NOT based on repo activity; push builds are configured through the project’s webhook instead.

To configure this, you must specify your project as a “Repository in my Account” (not a Public Project) and then specify an event type.

Restricting builds to a specific branch took a bit of trial and error. Some of the examples I found online didn’t include the start-of-line (^) and end-of-line ($) anchors, and those were required for the filter to work. With this setting, it should work:

```
aws codebuild batch-get-projects --names "firstone" | jq '.projects[0].webhook.filterGroups'
[
  [
    {
      "type": "EVENT",
      "pattern": "PUSH",
      "excludeMatchedPattern": false
    },
    {
      "type": "HEAD_REF",
      "pattern": "^refs/heads/master$",
      "excludeMatchedPattern": false
    }
  ]
]
```
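To illustrate why anchors matter in general, the sketch below uses Python’s `re.search` as a stand-in for CodeBuild’s pattern matching (an approximation, not its actual engine): without anchors, `refs/heads/master` also matches a ref like `refs/heads/master-old`. It also shows the same filter group passed to the CodeBuild client’s `update_webhook`, assuming Boto3 and credentials are available; `ref_matches` and `apply_filters` are hypothetical helpers.

```python
import re

# Same filter group as the batch-get-projects output above.
FILTER_GROUPS = [[
    {"type": "EVENT", "pattern": "PUSH", "excludeMatchedPattern": False},
    {"type": "HEAD_REF", "pattern": "^refs/heads/master$", "excludeMatchedPattern": False},
]]


def ref_matches(pattern: str, ref: str) -> bool:
    """Approximate a HEAD_REF filter with an unanchored regex search."""
    return re.search(pattern, ref) is not None


def apply_filters(project: str, region: str = "us-east-2") -> None:
    """Push FILTER_GROUPS to the project's webhook (requires Boto3 and credentials)."""
    import boto3  # imported here so ref_matches() works without Boto3

    client = boto3.client("codebuild", region_name=region)
    client.update_webhook(projectName=project, filterGroups=FILTER_GROUPS)
```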

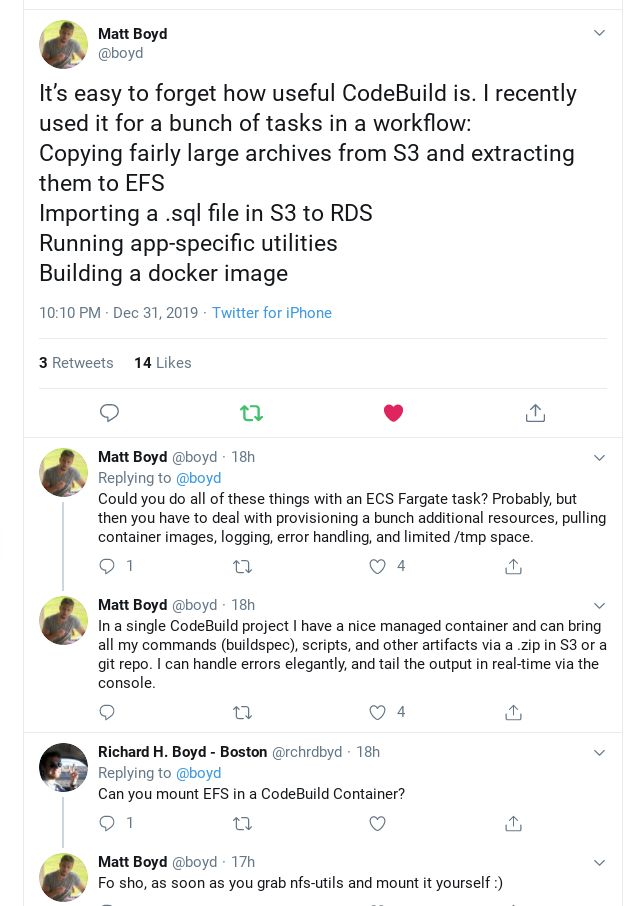

There are many more features that I didn’t have a chance to test, but the basics “just worked.” These features may or may not be good enough for complex (or parallel) workflows, but if you put most of your logic inside your code, it may not matter. Think of these as on-demand batch jobs that don’t have the execution-time limits of Lambda functions, as was mentioned in this thread.

Closing Thoughts

Both Jenkins and GitLab require a lot of maintenance to keep running and keep secure. Since GitLab is throwing every single open source tool into its platform (kidding, not kidding) and Jenkins has a huge plugin library that can be a challenge to keep up to date without breaking your builds, using CodeBuild is appealing.

Compromise of your build system (especially one connected to a cloud provider) is one of those nightmare scenarios that, back when I had operational and security responsibilities, would wake me up in the early morning in a cold sweat. Given the number of jobs that typically run, how would you know if artifacts were poisoned? How closely are you even monitoring your CI jobs?

Your own CI tools provide a rich attack surface, and if you are using your CI system to execute Terraform (or other cloud orchestration), this should definitely give you pause. Not only do you have to protect the web API and the UI, you also have to get the project-level RBAC just right, which is not easy, and you will be tempted to give developers less restrictive permissions than you should.

I haven’t fully looked at project-level IAM permissions, but I assume you can grant granular access per project, and each project can then have a different IAM service role depending on what is needed. This is easier said than done with Jenkins or GitLab. Given there is a Jenkins plugin for CodeBuild (or is it vice versa?), perhaps this could help reduce the permissions you need to give to Jenkins slaves, which can be a challenge.
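As a sketch of what project-level scoping could look like, here is an identity-based policy that lets someone operate only one project. The action list, the `Sid`, and the `project_operator_policy` helper are illustrative assumptions, not a complete or vetted policy; the project ARN format is `arn:aws:codebuild:<region>:<account>:project/<name>`.

```python
import json


def project_operator_policy(region: str, account_id: str, project: str) -> dict:
    """Identity-based policy allowing build operations on a single CodeBuild project."""
    project_arn = f"arn:aws:codebuild:{region}:{account_id}:project/{project}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "OperateSingleProject",  # hypothetical statement id
                "Effect": "Allow",
                # Illustrative subset of actions; extend or trim for your workflow.
                "Action": [
                    "codebuild:StartBuild",
                    "codebuild:StopBuild",
                    "codebuild:BatchGetBuilds",
                ],
                "Resource": project_arn,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(project_operator_policy("us-east-2", "012345678910", "firstone"), indent=2))
```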

Lastly, since full CloudWatch logging is available out of the box and build jobs can be controlled through the CLI and API, you could implement some interesting security monitoring contraptions in Lambda (we all love Lambda contraptions, right?) to detect and respond to malicious build activity.
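As an example of such a contraption, here is a sketch of a Lambda handler for CodeBuild build state-change events that flags builds started by an unexpected initiator. It assumes the event shape delivered by CloudWatch Events/EventBridge (a `detail-type` of “CodeBuild Build State Change” and an initiator under `detail.additional-information`), so verify it against a real event. The `ALLOWED_INITIATORS` environment variable and its default are hypothetical. A real responder might stop the build or notify someone instead of just logging.

```python
import os

# Hypothetical setting: comma-separated initiator prefixes considered normal.
ALLOWED_INITIATORS = tuple(
    p for p in os.environ.get("ALLOWED_INITIATORS", "GitHub-Hookshot/").split(",") if p
)


def is_unexpected(event: dict, allowed: tuple = ALLOWED_INITIATORS) -> bool:
    """True if the build's initiator does not start with any allowed prefix."""
    info = event.get("detail", {}).get("additional-information", {})
    return not info.get("initiator", "").startswith(allowed)


def handler(event, context):
    """Lambda entry point for CodeBuild build state-change events."""
    if event.get("detail-type") != "CodeBuild Build State Change":
        return {"unexpected": False}
    unexpected = is_unexpected(event)
    if unexpected:
        detail = event["detail"]
        # print() output from Lambda lands in CloudWatch Logs.
        print(f"ALERT: {detail.get('project-name')} build {detail.get('build-id')} "
              f"started by unexpected initiator")
    return {"unexpected": unexpected}
```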

References

To figure things out I used the following references: