DIY “Home NSM” Stream Processing with Suricata and a Raspberry Pi

This week, my last Raspberry Pi-related Amazon order arrived: an ArgonOne V2 case for a 2GB Pi4 I picked up earlier in the week at Micro Center, where my wife eyed it suspiciously. It completes the set of NSM sensors I started building over the holiday break. I’m no stranger to IDS and NSM. I got my start in tech in the San Antonio (and Austin) network security community that birthed some of the original IDS products. At Mandiant/FireEye, I couldn’t help but be surrounded by the Bro crowd when I worked on TAP. At Cisco and Digital Bond, I contributed to multiple SCADA Honeynet Projects. At Digital Bond and SAIC, I used Snort to develop and deploy IDS signatures for a few SCADA projects, but over the years I’ve always liked Suricata.

Why Suricata?

Although I first used Snort in the late 90s, Suricata has been my go-to NSM tool since the mid-2010s. It may lack the flexibility of Zeek, and I really haven’t gotten much use out of its IDS signatures, but I picked it for building out Raspberry Pi4 sensors for a few reasons I think are good:

- Up-to-Date Docker Images: using docker-suricata, I was able to get up and running on Intel and 32/64-bit ARM immediately. Forget about compiling anything as large as Zeek, Snort, or Suricata on low-end hardware; even on an AWS Graviton instance it takes a while. Don’t bother. There are up-to-date builds, and Jason Ish is super responsive if you find a bug. This also makes it easy to deploy on any system that has Docker, whether you’re monitoring a single endpoint or a bridged interface used by LXD containers or KVM virtual machines.

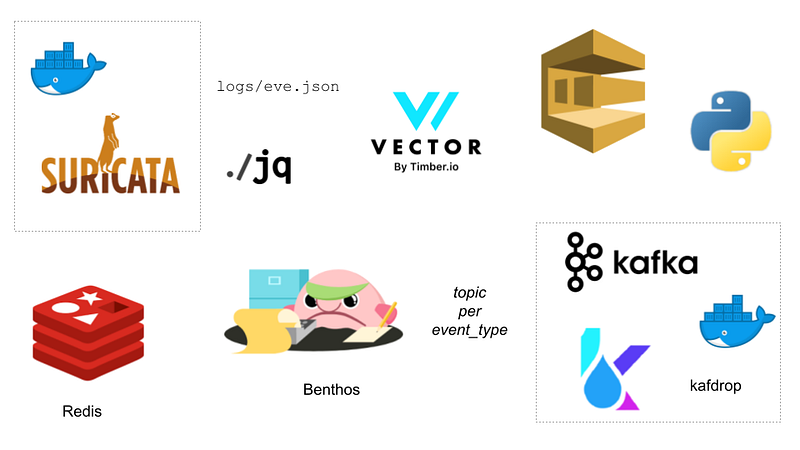

- Native JSON Logging makes it easy to process and ingest event streams or parse them with jq. Since Suricata has built-in Redis support, you can log to memory and then process the events with Benthos, which is really handy and something I’ve only begun to scratch the surface of. @Jeffail is also very helpful on the Benthos Discord server if you run into configuration issues, like when I didn’t have my Kafka broker configured properly.

- Good-enough protocol support, covering the most common protocols (HTTP, TLS, and DNS) plus NetFlow session data, for instant visibility into your network.
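Because EVE output is one JSON object per line with an `event_type` field, a few lines of Python can do what a quick jq filter would. The sketch below is illustrative only: the sample records are made up and trimmed to a couple of fields, and it simply tallies events by type.

```python
import json
from collections import Counter
from typing import Iterable


def count_event_types(lines: Iterable[str]) -> Counter:
    """Tally Suricata EVE records by their event_type field.

    Each line is expected to hold one JSON object (EVE's JSON-lines format).
    Blank, malformed, or non-object lines are skipped rather than aborting the run.
    """
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(event, dict):
            counts[event.get("event_type", "unknown")] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample records; real EVE output carries many more fields.
    sample = [
        '{"event_type": "dns", "src_ip": "192.168.2.50"}',
        '{"event_type": "tls", "src_ip": "192.168.2.50"}',
        '{"event_type": "dns", "src_ip": "192.168.2.51"}',
        'not json',
    ]
    print(count_event_types(sample).most_common())  # [('dns', 2), ('tls', 1)]
```

To run it against a real log, pass an open file handle, e.g. `with open("eve.json") as f: print(count_event_types(f))` (the filename is an assumption; use whatever your EVE output is configured to write). A rough jq equivalent is `jq -r .event_type eve.json | sort | uniq -c`.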

Building Event Pipelines

In the mid-2010s, I had the good fortune (or misfortune, I thought on days when we couldn’t figure out how to keep pre-1.0 Elasticsearch green) to work on a large cloud-based SIEM that processed insane amounts of security logs. The platform was a polyglot, microservices-gone-wild architecture with dozens of EC2 instances that ingested, parsed, and decorated security event streams for our customers. What is amazing now is the capability of small tools like Vector and Benthos, which let you build similar pipelines for capturing, routing, manipulating, and ingesting events into a variety of cloud services and messaging systems like Kafka, Redis, or NATS, all in very small binaries that run on 1–2GB systems. These tools are a great way to learn the fundamental concepts of stream processing with little to no coding.
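The ingest, decorate, and route stages described above can be illustrated with chained Python generators. This is a toy sketch of the concept, not how Vector or Benthos work internally; the `sensor` and `src_internal` fields are hypothetical enrichments chosen for the example, and "internal" here just means Python’s `ipaddress` considers the address private.

```python
import ipaddress
import json
from typing import Dict, Iterable, Iterator, List


def parse(lines: Iterable[str]) -> Iterator[dict]:
    """Ingest: turn raw JSON lines into dicts, dropping anything unparseable."""
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(event, dict):
            yield event


def decorate(events: Iterable[dict], sensor: str) -> Iterator[dict]:
    """Decorate: add context the raw event lacks.

    `sensor` and `src_internal` are hypothetical field names for this sketch.
    """
    for event in events:
        enriched = dict(event, sensor=sensor)
        src = event.get("src_ip")
        if src:
            try:
                enriched["src_internal"] = ipaddress.ip_address(src).is_private
            except ValueError:
                enriched["src_internal"] = None  # not a valid IP address
        yield enriched


def route(events: Iterable[dict]) -> Dict[str, List[dict]]:
    """Route: bucket events by event_type, like one topic or queue per type."""
    buckets: Dict[str, List[dict]] = {}
    for event in events:
        buckets.setdefault(event.get("event_type", "unknown"), []).append(event)
    return buckets


if __name__ == "__main__":
    raw = [
        '{"event_type": "dns", "src_ip": "192.168.2.50"}',
        '{"event_type": "alert", "src_ip": "8.8.8.8"}',
    ]
    buckets = route(decorate(parse(raw), sensor="pi4-example"))
    for name, items in buckets.items():
        print(name, items)
```

`parse` and `decorate` are lazy, so events stream through one at a time; only `route` collects results, standing in for whatever sink (Kafka, Redis, SQS) a real pipeline would write to.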

In about 10 lines of YAML, I pulled messages from Redis and routed them to a separate Kafka topic per Suricata event type. Except for Kafka and Zookeeper, I ran all these tools on 64-bit Ubuntu Linux on 1–4GB Raspberry Pi3Bs and Pi4Bs. Kafka will run on ARM, but I couldn’t find any up-to-date Docker images, so I ran it on Intel.

```yaml
input:
  redis_list:
    url: tcp://localhost:6379
    key: suricata

output:
  kafka:
    addresses:
      - 192.168.2.210:9092
    topic: 'suricata-${! json("event_type") }'
    client_id: benthos_kafka_output
```
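The `topic` field uses a Benthos interpolation, `${! json("event_type") }`, evaluated per message so each event lands in a topic named after its type. Here is a rough Python equivalent of that routing rule, handy for predicting which topics will show up in Kafka. The `unknown` fallback is an assumption of this sketch; I haven’t verified what Benthos does when the field is missing.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List


def topic_for(raw_message: str, prefix: str = "suricata-") -> str:
    """Mirror the Benthos topic rule 'suricata-${! json("event_type") }'.

    The "unknown" fallback is this sketch's assumption, not Benthos behavior.
    """
    try:
        event_type = json.loads(raw_message).get("event_type", "unknown")
    except (json.JSONDecodeError, AttributeError):
        # Not JSON, or JSON that isn't an object (so it has no .get).
        event_type = "unknown"
    return f"{prefix}{event_type}"


def plan_topics(messages: Iterable[str]) -> Dict[str, List[str]]:
    """Group raw messages by the Kafka topic they would be published to."""
    plan: Dict[str, List[str]] = defaultdict(list)
    for message in messages:
        plan[topic_for(message)].append(message)
    return dict(plan)


if __name__ == "__main__":
    messages = [
        '{"event_type": "dns"}',
        '{"event_type": "http"}',
        '{"event_type": "dns"}',
    ]
    for topic, batch in sorted(plan_topics(messages).items()):
        print(topic, len(batch))
    # prints "suricata-dns 2", then "suricata-http 1"
```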

Once the events are in Kafka, you can view them with Kafdrop or use kafkacat to make sure your cluster is functioning.

Next, you can begin writing consumers in your language of choice to process these messages. I also tested routing messages to SQS, if you don’t want to deal with running a broker on your network. Benthos and Vector both support a variety of cloud options that you could also use to build serverless pipelines.
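As a starting point for a Python consumer, here is a sketch that dispatches each record to a handler based on its topic. It keeps the broker connection out of the block so it runs standalone: the hypothetical `Record` class stands in for a consumer record with `.topic` and `.value` (bytes). The handler names are made up, and the DNS handler assumes the older EVE DNS layout where query names sit in `dns.rrname`; check the EVE docs for your Suricata version.

```python
import json
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class Record:
    """Hypothetical stand-in for a consumer record: topic plus raw payload."""
    topic: str
    value: bytes


def handle_dns(event: dict, stats: Counter) -> None:
    # Count queried names (assumes the name lives under dns.rrname).
    rrname = event.get("dns", {}).get("rrname")
    if rrname:
        stats[rrname] += 1


def handle_alert(event: dict, stats: Counter) -> None:
    # Count alert signatures, found under alert.signature in EVE alerts.
    signature = event.get("alert", {}).get("signature")
    if signature:
        stats[signature] += 1


# Topic names follow the suricata-<event_type> scheme from the Benthos config.
HANDLERS: Dict[str, Callable[[dict, Counter], None]] = {
    "suricata-dns": handle_dns,
    "suricata-alert": handle_alert,
}


def consume(records: Iterable[Record]) -> Dict[str, Counter]:
    """Dispatch each record to the handler registered for its topic."""
    stats: Dict[str, Counter] = {topic: Counter() for topic in HANDLERS}
    for record in records:
        handler = HANDLERS.get(record.topic)
        if handler is None:
            continue  # no handler registered for this topic; skip it
        handler(json.loads(record.value), stats[record.topic])
    return stats


if __name__ == "__main__":
    sample = [
        Record("suricata-dns", b'{"event_type": "dns", "dns": {"rrname": "example.com"}}'),
        Record("suricata-dns", b'{"event_type": "dns", "dns": {"rrname": "example.com"}}'),
        Record("suricata-alert", b'{"event_type": "alert", "alert": {"signature": "TEST"}}'),
        Record("suricata-tls", b'{"event_type": "tls"}'),
    ]
    for topic, counts in consume(sample).items():
        print(topic, counts.most_common(5))
```

With the kafka-python library, records from `KafkaConsumer` expose the same `.topic` and `.value` attributes, so you could subscribe with `consumer.subscribe(pattern="^suricata-.*")` and feed the consumer into the same dispatch loop. Since a live consumer never runs out of records, in practice you would report the counters periodically rather than on return.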

Hardware/OS Options

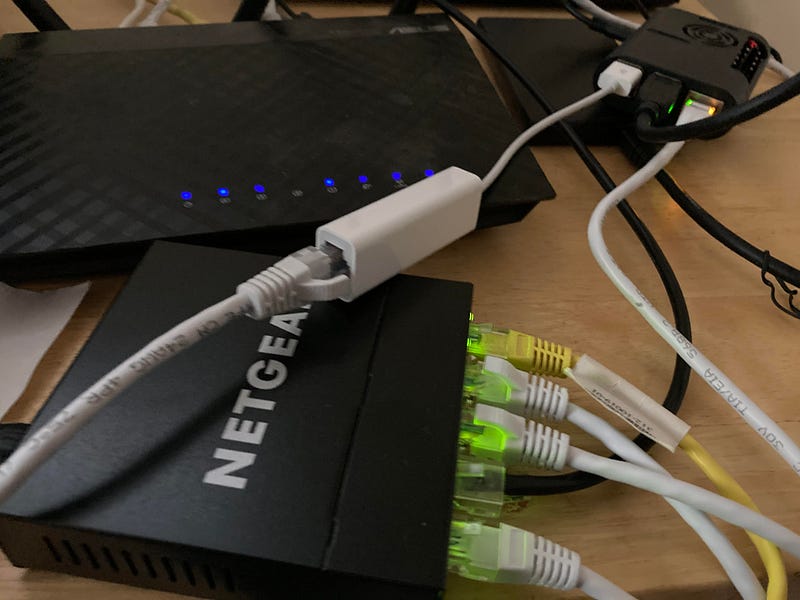

For monitoring traffic, I’m using a combination of inline/bridged NICs (either PCIe on Intel hardware or USB 3 on the Pis) or a span port on a Netgear GS305E managed switch. Although this setup works on a Raspberry Pi 3B+ with 1GB of RAM, I recommend the 2GB Pi4B as the minimum. That should be more than adequate to run Suricata, Redis, and Vector/Benthos as needed, with plenty of RAM to spare. Ubuntu 20.04 has been rock-solid, and I’ve standardized on it for all my Pi3B+ or better boards, so I get a consistent OS that matches what I’m used to in AWS. Being able to run the same distro on a beefier AWS aarch64 instance, with full support for Docker and LXD, is a big plus. Say goodbye to Raspbian in 2021!

Depending on how much you want to spend on a case for your Pi4, you can go as low as $8 for a MazerPi or up to $25. Well, there are some $50 cases you CAN get, but I can’t stomach that, even for an 8GB model. The new Argon One v2 and the Argon Neo with the “fanhat” both end up costing $25. You will definitely need a heat sink and a decent fan, because I may or may not have burned out the first Pi4 I bought a year ago. Definitely install the fan-control software: it just worked, and the Pis run a lot quieter when the fan can adjust to the CPU temperature. The cheaper MazerPi cases do work, and they are easier to assemble than some of the other Pi and Jetson Nano cases I couldn’t figure out and had to return to Amazon.

What Next?

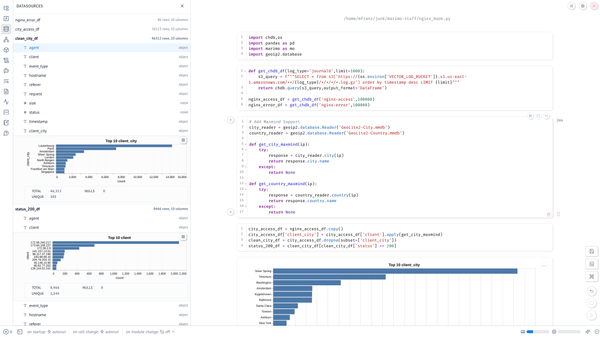

All the code, configurations, and even some documentation are available in the nsm4home GitLab project, and I’ve been continuing to improve it on weekends and evenings in 2021. Ultimately, my goal is to use this project to generate datasets for improving my data engineering and Python data skills. I just can’t get into the bland datasets available on Kaggle. It also doesn’t hurt to keep an eye on what my kids are doing on the network, or the occasional APT!
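As one possible first step toward turning sensor output into a dataset, nested EVE records can be flattened into dotted column names and written out as CSV. This is a stdlib-only sketch with made-up sample records; keeping lists as JSON strings is just one choice among several, and in practice you would likely pick the columns you care about.

```python
import csv
import io
import json
from typing import Any, Dict, Iterable, List


def flatten(obj: Dict[str, Any], parent: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dotted keys, e.g. {"dns": {"rrname": x}} -> {"dns.rrname": x}.

    Lists are kept as JSON strings so each event still fits in one CSV row.
    """
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{parent}.{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)
        else:
            flat[name] = value
    return flat


def events_to_csv(lines: Iterable[str]) -> str:
    """Convert EVE JSON lines into CSV text using the union of all flattened columns."""
    rows: List[Dict[str, Any]] = [flatten(json.loads(line)) for line in lines if line.strip()]
    columns = sorted({column for row in rows for column in row})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    writer.writerows(rows)  # columns missing from a row become empty cells
    return buffer.getvalue()


if __name__ == "__main__":
    sample = [
        '{"event_type": "dns", "src_ip": "192.168.2.50", "dns": {"type": "query", "rrname": "example.com"}}',
        '{"event_type": "tls", "src_ip": "192.168.2.51", "tls": {"sni": "example.org"}}',
    ]
    print(events_to_csv(sample))
```

From there, the CSV loads straight into pandas or any other tool for exploration.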